[This post is part of an ongoing challenge to understand 52 papers in 52 weeks. You can read previous entries here or subscribe to be notified of new posts by email.]

User interfaces are hard. So hard, in fact, that a friend of mine recently spent 45 minutes on hold with the IRS only to be told "Sorry, we're too busy. Call back later." He never even spoke to a real person.

We've all had to suffer through crappy user interfaces. If only there were a way for people building these things to discover potential issues before they hit users in the face.

There are, in fact, many. But in 1990 Jakob Nielsen and Rolf Molich published a paper called Heuristic Evaluation of User Interfaces that describes a cheap way of finding most usability problems in your interface, provided you use more than one evaluator.

Heuristic evaluation

Heuristic evaluation is essentially looking at the interface and forming an opinion about what's good or bad. Ideally you'd use a set of guidelines, but there are thousands of those, so people usually rely on experience and their own intuitions.

The authors have come up with a simplified list of nine heuristics:

- simple and natural dialogue

- speak the user's language

- minimize user memory load

- be consistent

- provide feedback

- provide clearly marked exits

- provide shortcuts

- good error messages

- prevent errors

The list came about through years of teaching and consulting on user interfaces and is particularly useful because it can be taught in a single lecture.

Empirical test of heuristic evaluation

Four experiments tested how well heuristic evaluation works in a real-world scenario. All of them follow the same pattern: pick an interface, define a list of known problems, give the design to a bunch of people to assess, and see how many of the problems they find.

Interestingly, there were times where the researchers had to add to their list of known problems because a test subject would find something they didn't think of. This indicates that no matter your experience, it is near impossible for a single person to bump into all usability problems.
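The scoring pattern behind all four experiments can be sketched in a few lines of Python. This is only an illustration: the function name `score_evaluator` and the problem IDs are made up, and the paper does not describe its bookkeeping in code.

```python
def score_evaluator(found, known):
    """Return (share of known problems found, problems not yet on the list)."""
    found = set(found)
    hits = found & known   # problems the researchers already knew about
    new = found - known    # candidates the researchers did not think of
    return len(hits) / len(known), new


# Hypothetical data: four known problems and one evaluator's report.
known = {"P1", "P2", "P3", "P4"}
report = {"P2", "P4", "P9"}

share, new = score_evaluator(report, known)
print(f"found {share:.0%} of known problems, new candidates: {sorted(new)}")
```

Anything that lands in `new` still needs a human to decide whether it is a real problem before it joins the known list.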

The first experiment used ten screen dumps from Teledata, a Danish videotex system. It was evaluated by 37 computer science students looking for a total of 52 known usability problems.

For the second test they designed Mantel. It was a design for a small information system working as a phone number directory accessible with a modem. Evaluators were readers of the Danish Computerworld magazine and only had access to a written specification. They were looking for 30 usability issues.

The third and fourth experiments used live systems instead of just designs. Once more the evaluators were students and their job was to look for problems in the voice response systems for Savings and Transport. Call with a phone, use a 12-key keypad to send tones at key moments. Easy.

While there was no overlap between subjects in the first two tests, they used the same evaluators with a similar background in usability (taking the authors' course) for both the third and fourth experiment. This allowed them to perform a comparison of evaluators.

Not sure if this was intentional, but the authors picked the worst type of systems. When I was a kid I hated the videotex system on our telly. It was extremely difficult to navigate, but it was the closest thing we had to the internet. And I still hate the whole "Press 1 to fuck off. Press 2 for information, maybe."

Why do we still have those?

The usability problems

Some of the usability problems found include inconsistencies in how commands work with the dial-in systems. One used an end-of-command key, the other used that same key for "return to main menu". Weird considering they both use the same equipment.

95% of the subjects also found it problematic that Mantel overwrites a user's phone number instantly, and 62% found it odd that the Transport system goes from main menus to submenus without much indication that this happened.

The exact problems don't matter as much as deciding whether they are actually valid. Is this something that a normal user would notice and struggle with?

It seems that given enough evaluators, especially if some of them are not trained, you should be able to mostly find problems that affect real people. The same problem discovered by multiple people is likely far more serious than something that only bugs a single person.

But you should keep in mind that it is impossible to find all problems for all occasions. Real users might use a system for different purposes and will hit different problems depending on what they're trying to achieve.

There is also a subset of users that will always struggle with your interface no matter what you do or how easy you make it. Just as there is a subset who will figure everything out as if by magic.

You can often notice this when a computer savvy person helps when something doesn't work. They touch the computer and everything acts exactly like it's supposed to, despite protestations that the exact same thing has been tried multiple times and never worked.

But let's not get into the whole "Friends don't let friends do tech support".

Evaluation results

The main result is that heuristic evaluation is hard. Even in the best case, evaluators barely found more than half of the problems.

But that's not too bad. Finding some problems is better than finding none, and you have to keep in mind that not all evaluators will find the same set of problems. Group problem discovery rates might actually be higher.

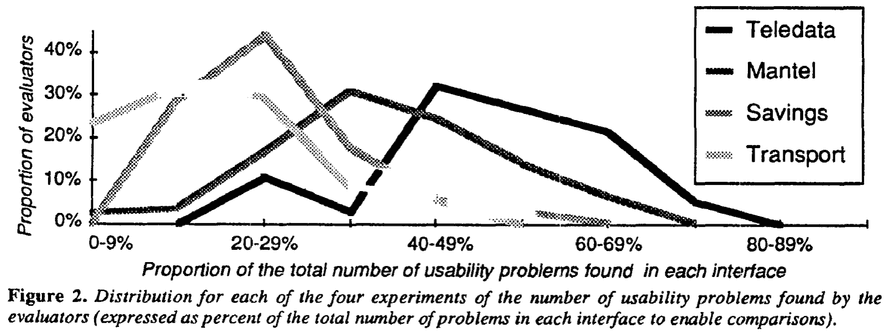

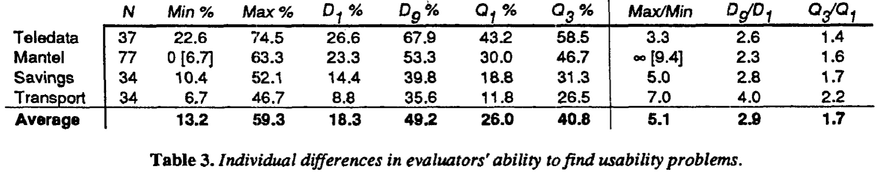

If you look at a table of individual differences in evaluators' ability to find problems, you'll see that variability is very high. The worst evaluator of Mantel found no problems, while the best found 63%, and the best evaluator overall found 74% of the problems in Teledata.

The table also shows that some systems might be easier to evaluate heuristically than others, but you can always augment heuristics with more rigorous engineering methods.

Aggregated evaluations

Looking at these results, it makes sense to aggregate evaluation results. This eliminates most false positives - usually found by a single person - and will take into account that even bad evaluators sometimes find hard problems missed by a better evaluator.
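Here is a minimal sketch of what aggregating reports could look like. The evaluator names, the problem descriptions, and the cutoff of two reports are my assumptions for illustration, not something the paper prescribes.

```python
from collections import Counter


def aggregate_reports(reports):
    """Count how many evaluators reported each problem."""
    counts = Counter()
    for problems in reports.values():
        counts.update(set(problems))  # one vote per evaluator per problem
    return counts


# Hypothetical reports: evaluator name -> problems they wrote down.
reports = {
    "ana": ["no exit from submenu", "overwrites phone number"],
    "ben": ["overwrites phone number"],
    "cat": ["overwrites phone number", "inconsistent end key", "ugly font"],
    "dan": ["inconsistent end key"],
}

counts = aggregate_reports(reports)
confirmed = [p for p, n in counts.most_common() if n >= 2]
single_report = sorted(p for p, n in counts.items() if n == 1)

print("Reported by several evaluators:", confirmed)
print("Reported once, review by hand:", single_report)
```

Keeping the single-report items in their own list instead of throwing them away matters: some will be false positives, but some will be the hard problems only one evaluator spotted.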

One issue with finding usability problems is that once pointed out, they become obvious to everyone. Discovering them first is the hard part.

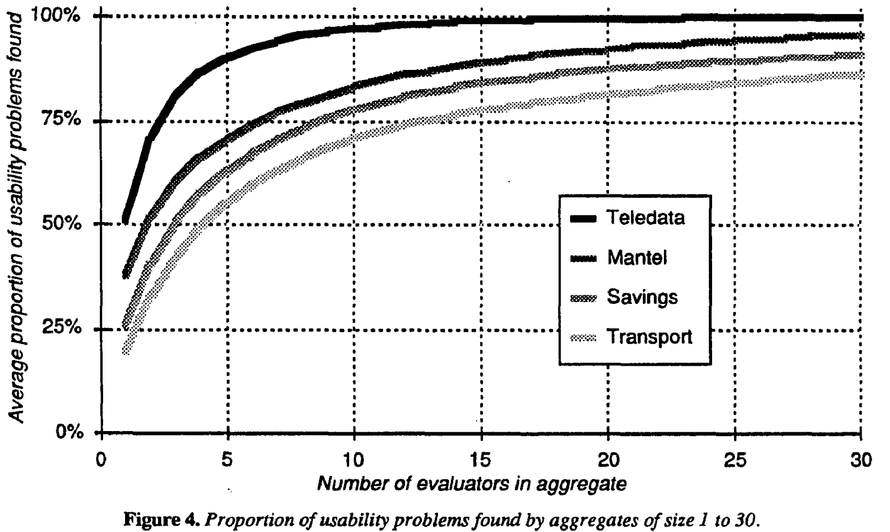

The researchers created multiple aggregate evaluations for each experiment by choosing a number of people at random. They discovered a rapid increase of discovered problems up to about 5 evaluators and diminishing returns after more than 10.

This suggests that your best bet for finding most usability problems is getting a group of seven or so people and asking them to look for problems. Much more than that and you're wasting time and money. Fewer than five and you're missing a lot.
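The random-group procedure described above is easy to try on your own evaluation data. The sketch below assumes a made-up set of six evaluators and ten known problems, and the function name `mean_coverage`, the trial count, and the seed are my choices. It will not reproduce the paper's curve, only the method.

```python
import random


def mean_coverage(reports, n_known, group_size, trials=1000, seed=0):
    """Average share of known problems found by random groups of evaluators."""
    rng = random.Random(seed)  # seeded so repeated runs give the same numbers
    evaluators = list(reports)
    total = 0.0
    for _ in range(trials):
        group = rng.sample(evaluators, group_size)
        # A group finds a problem if at least one member reported it.
        found = set().union(*(reports[name] for name in group))
        total += len(found) / n_known
    return total / trials


# Hypothetical data: 6 evaluators, 10 known problems numbered 0-9.
reports = {
    "e1": {0, 1, 2},
    "e2": {0, 3},
    "e3": {1, 4, 5, 6},
    "e4": set(),
    "e5": {0, 2, 7},
    "e6": {1, 3, 8},
}
N_KNOWN = 10

curve = {k: mean_coverage(reports, N_KNOWN, k) for k in range(1, len(reports) + 1)}
for k, share in curve.items():
    print(f"{k} evaluators: {share:.0%} of known problems on average")
```

Note that problem 9 is never found by anyone in this toy data, so even the full group tops out below 100%. That matches the point that you can't find every problem.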

You are also likely to find more problems if evaluators conduct their evaluation in private lest they influence each other.

Fin

The authors conclude their study by saying that heuristic evaluation is hard, but presents a cheap way of finding problems. Especially when you can get multiple evaluators and aggregate their results.

Major advantages are:

- cheap

- intuitive and easy to motivate people to do

- no advance planning needed

- can be used early in the process

Really it seems the only disadvantage might be that sometimes problems are found without any suggestions for a fix. But that's what your UX guy is for ;)


Who am I and who do I help? I'm Swizec Teller and I turn coders into engineers with "Raw and honest from the heart!" writing. No bullshit. Real insights into the career and skills of a modern software engineer.
