[This post is part of an ongoing challenge to understand 52 papers in 52 weeks. You can read previous entries, here]

"On a scale of OMFG to WTFLOL I was like whaaaaaaat?"

That's the line that got me hooked. While casually scrolling through candidates the words OMFG and WTFLOL shined out from the sea of dry academese.

Tom Murphy VII's The First Level of Super Mario Bros. is Easy with Lexicographic Orderings and Time Travel ... after that it gets a little tricky.

I loved it.

But it was published on April 1st, reads like a giant joke and contains assurances that it's 100% real to boot. And it turns out the conference - SIGBOVIK 2013 - is an annual conference devoted to spoof research. Hmm ...

After reading the paper and watching the videos I'm convinced this is either the best thought out April fool's joke ever, or real research published in an awesome way. Either way, the nitty gritty of the paper looks believable. There's also source code and plenty of video evidence.

I'm assuming the paper is real because I want to believe!

The Gist

In this paper @Tom7 writes about an algorithm he's developed over several weekends that plays classical NES games. The software consists of two utilities: learnfun and playfun.

learnfun watches you play a game and figures out what it means to win.

playfun uses that knowledge to play the game. In some cases failing miserably, in others playing impressively well and even exploiting bugs.

The best part of Tom7's approach is that it's mathematically elegant and very stupid simple. He says so himself.

A smarter system might emulate how a human plays the game - looking at the score, lives, and looking at enemies. Instead, this system relies only on RAM snapshots and tries to make bytes go up. Doesn't really matter what they mean, just that they go up.

After being tweaked on Super Mario the approach does smashingly well on left-to-right platformers, does a decent job of Pacman, and fails miserably on Tetris. But it's smart enough to pause Tetris riiiiight before losing and never unpauses it.

Clever.

The NES

"The Nintendo Entertainment System is probably the best video game console, citation not needed.", which I agree with. But back then hardware was much slower than even the most basic computers we have today.

Based around an 8-bit processor running at 1.79MHz (the latest iPhone's got two 1,500MHz processors) and with just 2048 bytes of RAM the NES is relatively easy to emulate on modern computers. In fact, this whole paper is based on the idea that handling NES's memory footprint as a data point is possible.

As a direct result of these limitations, NES programs use fixed memory locations for most things. For instance, Super Mario's lives are always stored at address 0x75F.

Tom7 used the FCEUX emulator, which let him simulate inputs, play at speeds faster than native hardware allows and gave him the ability to record user inputs and RAM snapshots.

What is "win"?

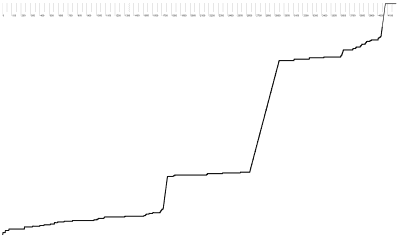

The central idea of Tom7's approach is that certain memory locations contain interesting data about the player's progress. But looking at just these locations going up would be too naive.

You can go from level 1-4 to level 2-1, which makes a byte go down and another byte go up. So we're going to look at lexicographic orderings and make sure those go up instead. Lexicographic orderings are mathematically beautiful and also generalize to multi-byte sequences (highest 1 byte score would be 255).

A lexicographic ordering is essentially a pair of values (w1, l1) that is smaller than (w2, l2) when w1 equals w2 and l1 < l2 or w1 < w2.

To find the objective function playfun has to go through our recording and look for maximal tight valid lexicographic orderings in the memory snapshots. Now I'll be perfectly honest with you and admit I'm not entirely certain what those are.

But on "a scale from simple to fancy the algorithm for finding them is a 3". The algorithm uses a list of locations and memories, looks for locations where memories differ and uses those as prefixes to find pairs of locations that globally go up as lexicographic orderings.

The orderings are tight when every location participates in the ordering at least once, and maximal when you can't find a longer ordering.

In practice we ignore all RAMs until the first player input and slice the recording to avoid problems with momentarily decreasing values. The whole memory output is sliced every few frames with the idea of finding objective functions that increase steadily and don't get confused by local noise.

To further reduce the influence of randomness and the arbitrary slicing, objective functions are weighted by how far away from ideal they are. The values of each objective function's memory locations are ordered lexicographically and duplicates removed to produce a vector.

This vector's value fraction is calculated as i/|V| where i is the lowest index where the vector is less than or equal to Vi, which gives us a chance to compare objectives on a global scale.

We then weight them with VF(Mm) - VF(M0) where M's are memory slices.

Learning how to play

Now that playfun knows what to aim for, it needs to know what to do. Generally speaking, playfun will be a search function that finds the optimal sequence of inputs to satisfy the objective functions from before.

The simplest approach is trying everything and seeing what works. But the search space at each frame is 28 (8 inputs), which makes it far too big. The video above shows you that this approach just makes Mario jump up until he dies of old age.

But hey, the music counter always went up, so surely playfun was winning, right?

A better approach is taking motifs of 10 keystrokes from player inputs and weighting them by how often they appear in the sequence. "On a scale from gravitational constant to pulled it out of my arse, this is an 8.", but 10 works well enough so just roll with it.

Playing naively

To make this paper possible Tom7 had to tweak the FCEUX emulator to allow deeper integration, which isn't very interesting. The end result is the playfun program, which spends most of its CPU time on emulating NES code and outputs a frame by frame video of the gameplay.

It currently needs an hour to produce 16 seconds of gameplay.

As mentioned, the first approach was finding the best scoring keypress to play on each frame, which at best makes Mario twitch in place and die of old age.

A better approach was looking 10 frames into the future and using the best scoring 10-keystroke motif. It's rare for a single keystroke to make much of a difference in a single frame anyway.

But Mario still only avoids death by accident, insists on running backwards, and can't jump high enough for the bigger pipes. Those require a consistent jump that lasts some 40 to 50 frames.

Using time travel

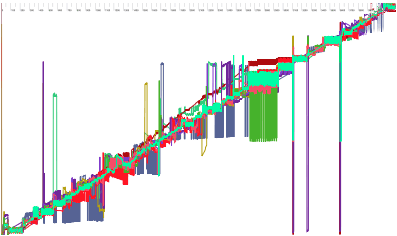

A much better approach is looking into the future and seeing what happens.

Running some 30 to 50 frames into the future and trying random sequences of motifs gives Mario a chance to avoid danger. Just give motifs that eventually end in death a very low score and Mario will no longer run into danger willy-nilly and only notice he's moving too fast to stop after it's too late.

But he must also seek adventure. Otherwise the winning move is waiting on the first square and letting the music timer advance, satisfying the game objective "Make numbers in RAM go up".

For that we use the same approach: look into random future, find the one with the best outcome.

Now we have two bounds, lowest and highest, of what we can achieve in the next few frames. How do you find the stable future that falls somewhere in between and has the highest likelihood of doing well in the long term?

Keeping some 40 of these random futures in memory, then picking which to replay for the next 10 frames according to a weight of how good they are, seems to work well. These futures are extended with more random motifs when they become too short - later replaced with a mutation function to avoid too much randomness - the worst futures are kicked out and replaced with new random futures, and most importantly, the motifs are reweighed according to what seems to work.

This means our search function will eventually become constrained only to things that worked in the past and are likely to work again.

Mario finishes level 1-1 like it's nothing, then gets stuck in a local maximum on level 1-2 and dies of old age.

Backtracking

Mario gets stuck because coins are better than enemies and once you're up on the ledge the only way to make RAMs grow all to wait out the music counter and those tiny scrolls. This is a local maximum.

The long-term better approach is jumping back down and continuing the game.

To achieve backtracking the algorithm saves a checkpoint every once in a while, then every other once in a while stops what it's doing and finds the last checkpoint that is at least some distance away. The sequence between then and now is called improveme.

Then Mario resets to the beginning of improveme, includes it in the replacement futures, and generates some other replacements based on improveme and whatever futures it was already thinking about. Then it pretends replacements are the available futures and plays normally, but doesn't update motifs or real futures.

The best available replacement is then slotted in instead of improveme. If the original sequence happens to be best, backtracking does nothing.

Replacement futures are generated in four ways:

- random - just a random sequence of inputs as long as

improveme - dualization - reverses

improvemeso that up becomes down, left becomes right, or randomly reverses sections of the sequence; introduces variety - ablation - removes a random input for the whole sequence; jumping might be slowing us down or something

- chop - randomly chops parts of

improvemeout as long as the new version is better than the old

This seemed to work, but turns out it only helped through slightly consistent luck. Tom7 discovered a bug in his objective weighting code while writing the paper for SIGBOVIK. Fixing it was an immediate breakthrough and Mario started playing like a champ.

Maybe all the fancy search stuff wasn't necessary after all, but we'll never know because watching Mario play is more fun than finding out which parts of the algorithm are safe to remove.

Performance improvements

When you have an algorithm that takes an hour to calculate 16 seconds of output, you might want a faster algorithm

Most of the time is spent emulating NES code - 500µs per frame - and not much can be done about that. Instead, we can avoid calculating new frames.

Our environment is completely deterministic, so whenever we want to repeat an input at the same memory state, we can read the result from memory in a fraction of the time it takes to recalculate it. Because modern computers are so much better than the NES, we can store some 32 million memory snapshots with a bit of compression and cutting out bytes that never change.

Compression ended up taking too much time and our memory is big enough to handle uncompressed snapshots.

The more interesting part was MARIONET, a networked version of playfun that utilizes multiple cores and potentially multiple computers to score futures for Mario to run.

Tom7 used a rather straightforward approach to multiprocessing via TCP/IP: You have a master process that handles the outside part of the loop, makes sure to always play the best possible future and keeps potential futures stored.

But the inner loop, uses helper processes that are given a memory state and a potential future to check against known motifs. The main process always waits for everyone to finish and that's pretty much it, you can use ready-made libraries for all of this stuff.

This managed to utilize all 6 cores of Tom7's computer fully. He mentions it kept the bedroom warm.

Results

The result is a system that's able to beat worlds 1-1 and 1-2 of Super Mario Bros. every time, then jumps into the first pit in world 1-3.

It does very well on some other games, and kind of poorly on some other games still. The paper takes a few pages to describe these results, but I think you should just look at the video. More fun that way.

As authors are wont to do, Tom7 promises he will do more stuff as Future Work™. Some venues to explore include multiplayer games, better search methods, improving the input model and reducing the number of magic constants. Let's hope he gets around to it.

Continue reading about Week 2: Level 1 of Super Mario Bros. is easy with lexicographic orderings and

Semantically similar articles hand-picked by GPT-4

- Eight things to know about LLMs

- Why I will always suck at programming [Grega Stritar]

- Livecoding #38 - A faux AI that writes JavaScript

- Science Wednesday: Towards a computational model of poetry generation

- Week 11, Searching the Internet for evidence of time travelers

Learned something new?

Read more Software Engineering Lessons from Production

I write articles with real insight into the career and skills of a modern software engineer. "Raw and honest from the heart!" as one reader described them. Fueled by lessons learned over 20 years of building production code for side-projects, small businesses, and hyper growth startups. Both successful and not.

Subscribe below 👇

Software Engineering Lessons from Production

Join Swizec's Newsletter and get insightful emails 💌 on mindsets, tactics, and technical skills for your career. Real lessons from building production software. No bullshit.

"Man, love your simple writing! Yours is the only newsletter I open and only blog that I give a fuck to read & scroll till the end. And wow always take away lessons with me. Inspiring! And very relatable. 👌"

Have a burning question that you think I can answer? Hit me up on twitter and I'll do my best.

Who am I and who do I help? I'm Swizec Teller and I turn coders into engineers with "Raw and honest from the heart!" writing. No bullshit. Real insights into the career and skills of a modern software engineer.

Want to become a true senior engineer? Take ownership, have autonomy, and be a force multiplier on your team. The Senior Engineer Mindset ebook can help 👉 swizec.com/senior-mindset. These are the shifts in mindset that unlocked my career.

Curious about Serverless and the modern backend? Check out Serverless Handbook, for frontend engineers 👉 ServerlessHandbook.dev

Want to Stop copy pasting D3 examples and create data visualizations of your own? Learn how to build scalable dataviz React components your whole team can understand with React for Data Visualization

Want to get my best emails on JavaScript, React, Serverless, Fullstack Web, or Indie Hacking? Check out swizec.com/collections

Did someone amazing share this letter with you? Wonderful! You can sign up for my weekly letters for software engineers on their path to greatness, here: swizec.com/blog

Want to brush up on your modern JavaScript syntax? Check out my interactive cheatsheet: es6cheatsheet.com

By the way, just in case no one has told you it yet today: I love and appreciate you for who you are ❤️