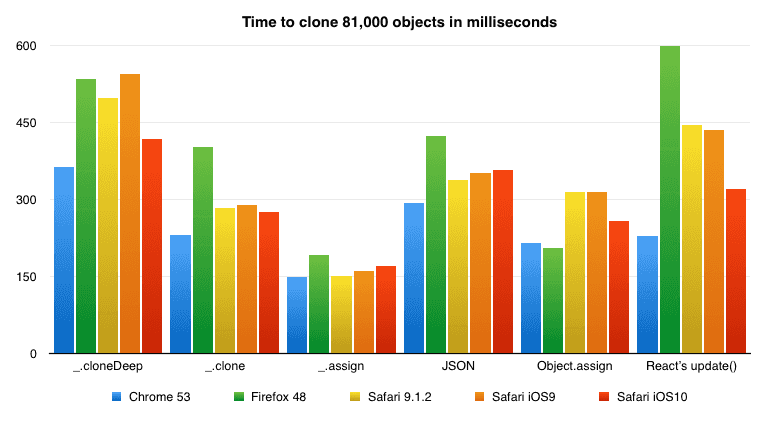

Look at those colors! Aren’t they shiny?

They’re super shiny (unless you’re color blind), but what do they mean? I’m glad you asked. That’s a speed comparison chart of 6 ways to clone JavaScript objects, run in 5 browsers, on 2 devices: my laptop and my iPhone 5SE.

You can try the benchmark yourself:

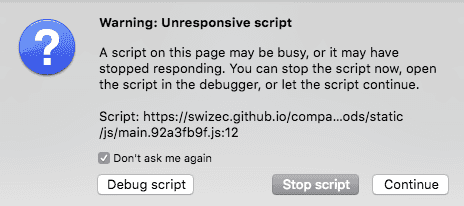

Click here. I’d make an iframe, but it freezes my browser for many seconds at a time. It even freezes the CSS animation on that React logo.

Dangerous business those benchmarks.

Firefox is the only browser that decides something weird is going on and throws a warning. Everyone else happily blocks JS, CSS, and UI.

Now, is this benchmark fair? I don’t know. Running benchmarks on a computer that’s doing a bunch of other stuff is never really fair. Maybe a different tab just tried to do something, or Spotify downloaded a song, or Dropbox ran a metadata update on my entire hard drive.

A bunch of things can affect these results. That’s why you can run it yourself. Still, I did my best to keep things fair:

- Each test runs alone, asynchronously

- Each test is re-run 20 times

- Each test uses the same source data

- Each test produces the same deep-ish cloned dataset

I say “deep-ish” because we’re cloning an array of some 81,000 objects. The objects are shallow, which means we can cut corners.

    const experiments = {
      "lodash _.cloneDeep": _.cloneDeep,
      ".map + lodash _.clone": (arr) => arr.map((d) => _.clone(d)),
      ".map + lodash _.assign": (arr) => arr.map((d) => _.assign({}, d)),
      "JSON string/parse": (arr) => JSON.parse(JSON.stringify(arr)),
      ".map + Object.assign": (arr) => arr.map((d) => Object.assign({}, d)),
      ".map + React's update()": (arr) => arr.map((d) => update({}, { $merge: d })),
    };

We use _.cloneDeep without telling it anything about the data. This is a little bit unfair because it has to handle the general case. We run _.clone, _.assign, Object.assign, and React’s update in a loop over the array. They benefit from not trying to work in the general case. JSON.parse/stringify is on the same level as _.cloneDeep: naïve, complete, and works for anything JSON can represent.
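To make “deep-ish” concrete, here is a minimal sketch of why the per-element shallow copies are enough for this dataset but would not be for nested data. The `flat` and `nested` arrays and the `cloneShallowEach` name are made up for illustration; they are not part of the benchmark.

```javascript
// Hypothetical sample data; the real benchmark clones ~81,000 flat objects.
const flat = [{ id: 1, name: "a" }, { id: 2, name: "b" }];
const nested = [{ id: 1, tags: ["x"] }];

// Same shape as the ".map + Object.assign" experiment: a new array
// holding a shallow copy of every element.
const cloneShallowEach = (arr) => arr.map((d) => Object.assign({}, d));

const flatCopy = cloneShallowEach(flat);
flatCopy[0].name = "changed";
console.log(flat[0].name); // "a": the original object is untouched

const nestedCopy = cloneShallowEach(nested);
nestedCopy[0].tags.push("y");
console.log(nested[0].tags.length); // 2: the nested array is still shared
```

With flat objects the shortcut behaves like a deep clone. As soon as an element holds an array or another object, only _.cloneDeep or the JSON round trip gives you a fully independent copy.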

I’m gobsmacked that for datasets this big, you’re better off converting to JSON and back than using Lodash’s cloneDeep function. I have no idea how that’s even possible. Maybe JSON benefits from an implementation detail deep in the engine?
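One caveat before swapping _.cloneDeep for a JSON round trip: the two are only equivalent for data that survives serialization. A small sketch with a made-up object (the field names are hypothetical) shows what changes along the way:

```javascript
// Hypothetical record with values JSON cannot represent faithfully.
const original = [{ when: new Date(0), skip: undefined, n: 1 }];
const copy = JSON.parse(JSON.stringify(original));

console.log(typeof copy[0].when); // "string": the Date became an ISO string
console.log("skip" in copy[0]);   // false: undefined properties are dropped
console.log(copy[0].n);           // 1: plain values survive
```

The benchmark data is plain objects, so none of this affects the comparison here.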

But then why is _.assign faster than Object.assign? They both make a shallow copy of an object, but Object.assign is a language feature, and _.assign is implemented in pure JavaScript.

I think … how else? I hope @jdalton can shed some light on this.

You can see the entire test runner on GitHub here. There are a few comments, but the interesting bit is this runner function. It ensures fairness by isolating timing to only the cloning method.

    runner(name, method) {
      let data = this.state.data;
      const times = d3.range(0, this.N).map(() => {
        const t1 = new Date();
        let copy = method(data);
        const t2 = new Date();
        return t2 - t1;
      });
      let results = this.state.results;
      results.push({ name: name, avg: d3.mean(times) });
      this.setState({ results: results });
    }

The runner itself is called asynchronously via setTimeout(foo, 0), and the async library ensures tests don’t happen in parallel. Inside runner, we iterate through N = 20 indexes, take a timestamp, perform the clone, take another timestamp, and construct an array of time diffs. Then we use d3.mean to get the average and add it to this.state.results with setState.
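If you want to try the same idea outside React, here is a rough standalone sketch. It is not the code from the repo: it swaps `new Date()` for `performance.now()` (finer resolution), replaces d3.mean with a hand-rolled `mean`, and uses async/await instead of the async library. The names `timeClone`, `runAll`, `sample`, and `sampleExperiments` are made up for this example.

```javascript
// Time one cloning method: `runs` repetitions, one duration (in ms) per run.
function timeClone(method, data, runs = 20) {
  const times = [];
  for (let i = 0; i < runs; i++) {
    const t1 = performance.now();
    method(data); // only the clone sits between the two timestamps
    const t2 = performance.now();
    times.push(t2 - t1);
  }
  return times;
}

const mean = (xs) => xs.reduce((sum, x) => sum + x, 0) / xs.length;

// Run the experiments one at a time, yielding to the event loop
// before each one (the same role setTimeout(foo, 0) plays above).
async function runAll(experiments, data, runs = 20) {
  const results = [];
  for (const [name, method] of Object.entries(experiments)) {
    await new Promise((resolve) => setTimeout(resolve, 0));
    results.push({ name, avg: mean(timeClone(method, data, runs)) });
  }
  return results;
}

// Hypothetical small dataset; the real benchmark uses ~81,000 objects.
const sample = Array.from({ length: 1000 }, (_, i) => ({ id: i, label: `item ${i}` }));
const sampleExperiments = {
  "JSON string/parse": (arr) => JSON.parse(JSON.stringify(arr)),
  ".map + Object.assign": (arr) => arr.map((d) => Object.assign({}, d)),
};

runAll(sampleExperiments, sample).then((results) => console.log(results));
```

The numbers you get will vary from run to run and machine to machine, for all the reasons listed earlier.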

It runs in React because I’m lazy and create-react-app is the quickest way to set everything up :)

Maybe there’s some unfairness in how Babel compiles this code? That’s possible.

My conclusion is this: Use the appropriate algorithm for your use-case, and run your code in Chrome.


Who am I and who do I help? I'm Swizec Teller and I turn coders into engineers with "Raw and honest from the heart!" writing. No bullshit. Real insights into the career and skills of a modern software engineer.
