Generative AI makes super flashy demos. You try a thing and it just works! Fiddle with the prompt and it improves 🤩 Then you evaluate on lots of data and ... oh no.

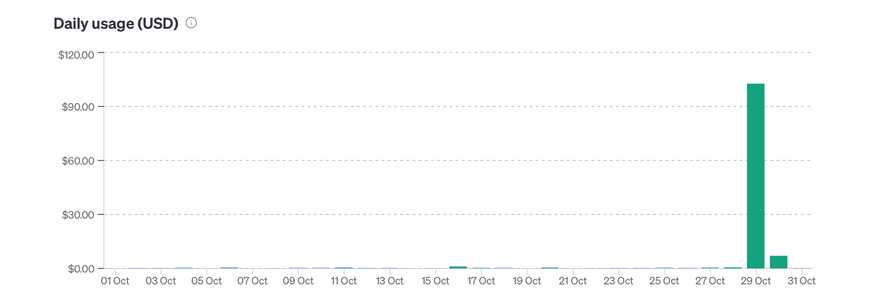

That's what happened to me this weekend. I spent $110 and 26 hours running this thing on my blog.

blows my mind this works pic.twitter.com/IgzMXmXJXO

— Swizec Teller (@Swizec) October 29, 2023

The results were ... not great. After editing 940 files, GPT-4 made mistakes on 25 of 35 files. I stopped counting after that.

No wonder evals were the biggest topic of conversation at AI Eng Summit. Vibe checks don't scale.

The experiment

My task was simple: There are 1774 posts on swizec.com stored as .mdx files. Some of these break MDXv2 and stop me from finishing the upgrade to Gatsby v5. Gatsby chokes on 1 file at a time and tells you little about what's wrong.

Solution: I've used ChatGPT in the past to fix or reformat naughty files. Always worked fine. Set it loose on my files and it's gonna fix the problem right?

Narrator: Wrong. Swiz was lazy and should've taken the extra time to write a deterministic parser. This is not a problem for AI.

You can see the whole script on GitHub. It loops over files and calls the OpenAI API with a system prompt that passed the vibe check.

// main loop

const mdxFiles = findMdxFiles("../src/pages/blog/") // You can replace './' with the desired directory

for (const file of mdxFiles) {

const content = fs.readFileSync(file, "utf8")

if (content.includes('")') || content.includes("{")) {

console.log(file)

const cleanedContent = await cleanupMarkdown(content)

if (cleanedContent) {

fs.writeFileSync(file, cleanedContent)

console.log("done")

} else {

console.log("empty return")

}

}

}

To save costs I'm limiting the script to files with known potential problems. MDXv2 doesn't like free floating {} in text – evaluates them as a JavaScript expression – and I think it struggles with wonky markdown like .

The GPT-4 call looks like this:

const cleanupMarkdown = async (content: string): Promise<string | null> => {

try {

const response = await openai.chat.completions.create({

messages: [

{

role: "system",

content: `You clean up MDX content so it conforms to standard syntax. The user sends you a file and you return the cleaned up file with no additional content. Do not change frontmatter.\n\nMake sure image captions only appear in the [] portion of an image tag, for example [hello](./img/hello.png "hello") -> [hello](./img/hello.png)\n\n${

content.includes("{")

? "Wrap expressions like {abc} in backticks: `{abc}`"

: ""

}`,

},

{

role: "user",

content,

},

],

model: "gpt-4",

})

return response.choices[0].message.content

} catch (error) {

console.error("Error cleaning up markdown:", error)

return content

}

}

I developed this prompt over a few iterations using 2 test files. Kept tweaking until GPT-4 correctly fixed both files a few times.

This is the "vibe check" portion of evals.

Note the conditional in my prompt. This is a warning sign of things to come. I should've known 😅

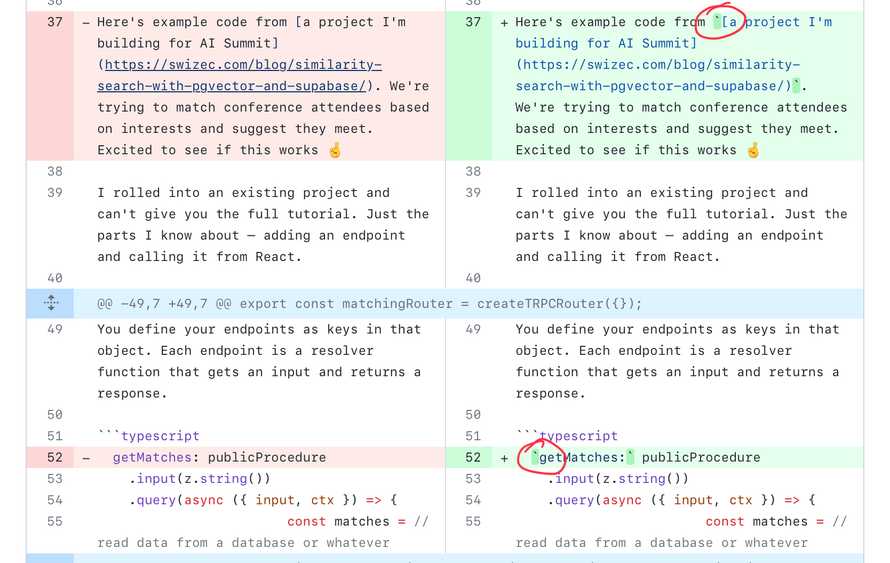

You can see the full diff on GitHub.

Surprising successes

Lots of things worked great!

All files remained valid markdown. The expected transformations worked. GPT-4 didn't output any fluff before or after the expected content. I know that sounds basic, but when dealing with stochastic parrots predicting the next token, you can't assume.

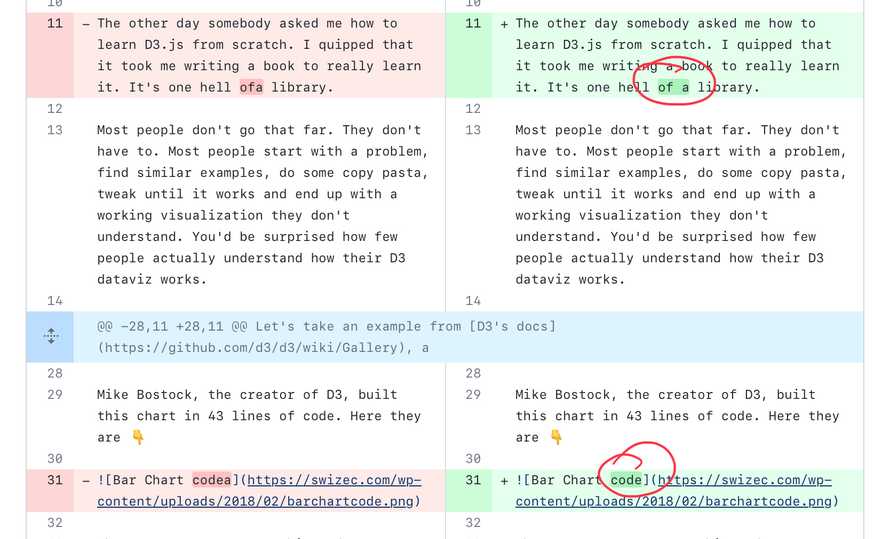

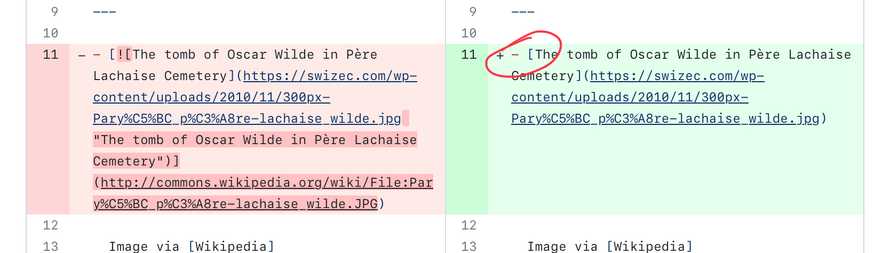

Better yet, GPT-4 made proactive improvements!

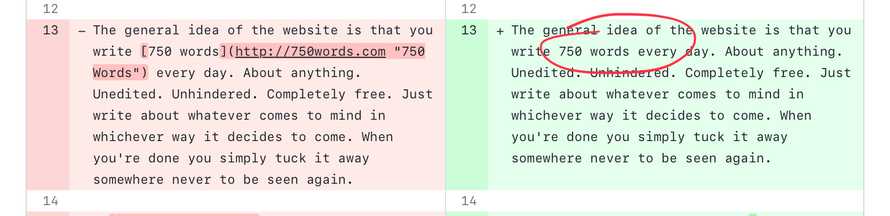

It fixed typos.

It fixed bad syntax in code snippets.

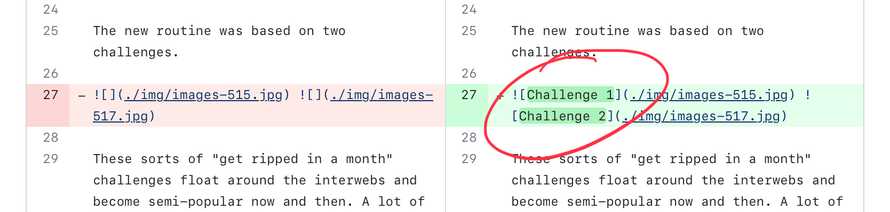

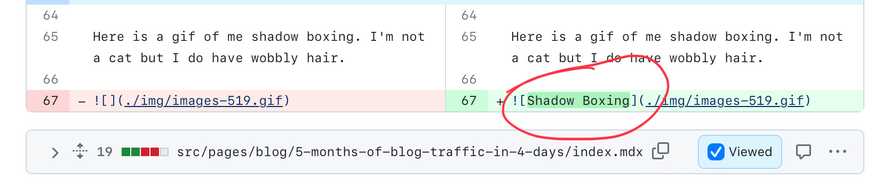

It inferred image captions from context when needed. This was my favorite surprise.

When it worked, AI worked better than expected 💪

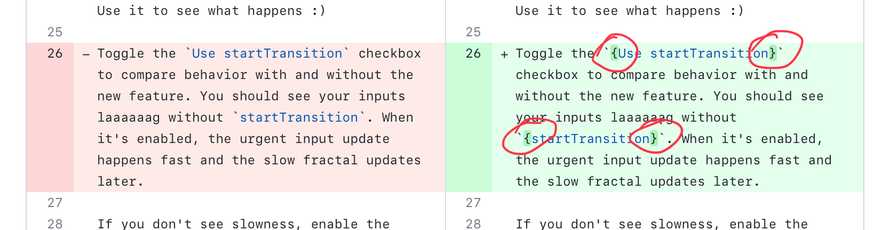

Disappointing failures

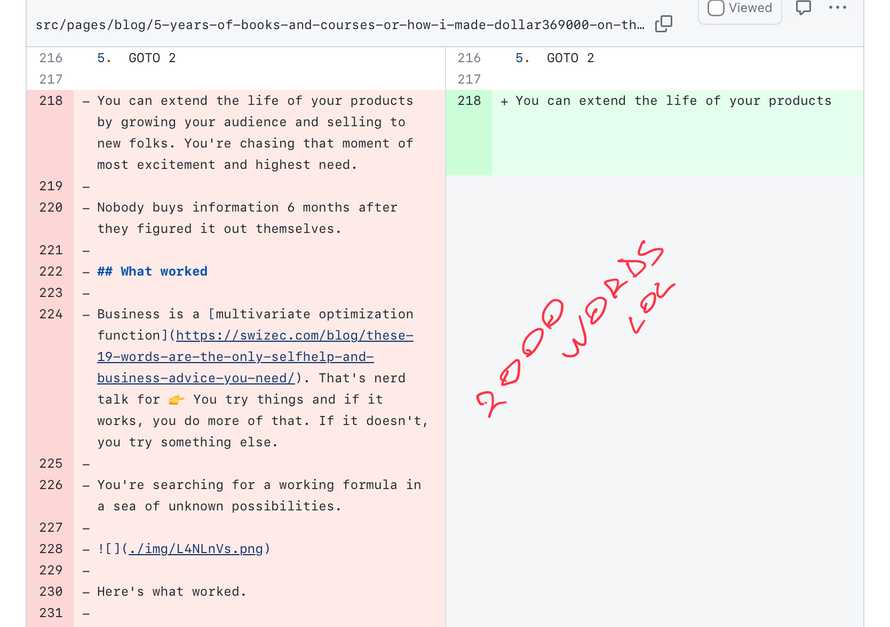

Going through the diff with all changes I opted for a strict pass/fail evaluation.

The AI passed, if the resulting file is good to go.

The AI failed, if the file had any mistakes like unnecessary changes, deleted or reworded content, or the syntax was semantically wrong like an image turned link.

I stopped counting after 35 files because a 28.5% success rate is no good. Likely improvable with a better prompt.

It loved to turn images into links.

It would remove content from long articles.

It would decide some links were unnecessary. Maybe my links are dumb but that's not for you to decide AI.

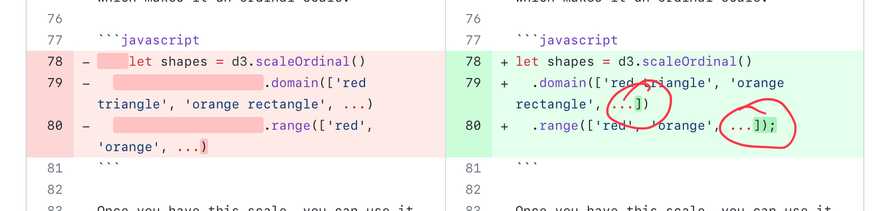

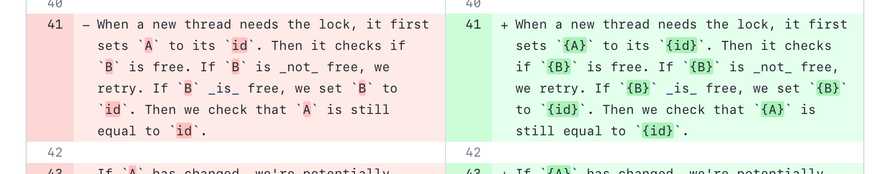

It would desperately try to follow instructions even when they don't apply. This was the biggest learning for me and something I've seen hints of in the past. GPT-4 is trained so hard to please that it will just make up a way to follow your instructions even if they don't apply.

You asked for backticks around curly braces and I can't find no curly braces? Here's backticks and curly braces around some code-lookey stuff. I'm a good AI please tell me I'm a good AI.

It's like training my bird to run around a thing you point at (he loves this). If he can't understand what you're pointing at, he'll run around any random object in his sight then ask for a treat.

Or like that time he learned a clicker sound means treats and started clicking the clicker himself 😂

The cost

Running this on 940 files cost about $110 and took 26 hours. We averaged around 35 requests per hour.

You don't realize how slow and expensive AI is to run until you try to batch some real work. Feels decently fast when you're just poking around.

Lessons learned

Don't ask the AI for something that might not apply to your content. Test and evaluate on more inputs before going for the full run.

And make sure you parallelize the work.

Cheers,

~Swizec

Continue reading about I tried generative AI on lots of data and we're not quite there yet

Semantically similar articles hand-picked by GPT-4

- Programming in Markdown

- How I turned 15 years of writing into a chatbot

- Building apps with OpenAI and ChatGPT

- The programming tutorial SEO industry is dead

- How to export a large Wordpress site to Markdown

Learned something new?

Read more Software Engineering Lessons from Production

I write articles with real insight into the career and skills of a modern software engineer. "Raw and honest from the heart!" as one reader described them. Fueled by lessons learned over 20 years of building production code for side-projects, small businesses, and hyper growth startups. Both successful and not.

Subscribe below 👇

Software Engineering Lessons from Production

Join Swizec's Newsletter and get insightful emails 💌 on mindsets, tactics, and technical skills for your career. Real lessons from building production software. No bullshit.

"Man, love your simple writing! Yours is the only newsletter I open and only blog that I give a fuck to read & scroll till the end. And wow always take away lessons with me. Inspiring! And very relatable. 👌"

Have a burning question that you think I can answer? Hit me up on twitter and I'll do my best.

Who am I and who do I help? I'm Swizec Teller and I turn coders into engineers with "Raw and honest from the heart!" writing. No bullshit. Real insights into the career and skills of a modern software engineer.

Want to become a true senior engineer? Take ownership, have autonomy, and be a force multiplier on your team. The Senior Engineer Mindset ebook can help 👉 swizec.com/senior-mindset. These are the shifts in mindset that unlocked my career.

Curious about Serverless and the modern backend? Check out Serverless Handbook, for frontend engineers 👉 ServerlessHandbook.dev

Want to Stop copy pasting D3 examples and create data visualizations of your own? Learn how to build scalable dataviz React components your whole team can understand with React for Data Visualization

Want to get my best emails on JavaScript, React, Serverless, Fullstack Web, or Indie Hacking? Check out swizec.com/collections

Did someone amazing share this letter with you? Wonderful! You can sign up for my weekly letters for software engineers on their path to greatness, here: swizec.com/blog

Want to brush up on your modern JavaScript syntax? Check out my interactive cheatsheet: es6cheatsheet.com

By the way, just in case no one has told you it yet today: I love and appreciate you for who you are ❤️