This post is summarized from Chapter 3 of Ruli Manurung's An evolutionary algorithm approach to poetry generation from 2003, so it is essentially 10-year-old research from a fast-moving field of science. However, these are core principles and techniques; a casual perusal of Wikipedia indicates they are still valid.

If you know of something new and spectacular, I'd love to know.

Natural Language Generation

So what is natural language generation anyway? The only thing everyone can agree on is that the algorithm should take some manner of input and output a sensible text that a human can read and understand.

Formally defined, an NLG system should accept a <k,c,u,d> tuple, where k is the knowledge source, c is the communicative goal, u is the user model and d is the discourse model. Essentially this means "You know K and you want to say C to U, using the style of D". Remember back to your high school days: before writing an essay, the teacher would tell you exactly this.

The output ... well, that's easier: it should make sense, fulfill the communicative goal and look like it was written by at least a trained monkey.
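To make the tuple a bit more concrete, here is a minimal sketch of how the input could be modelled in Python. The class name, field names and field types are my own assumptions for illustration; the thesis only defines the four components, not a data format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NLGInput:
    """Hypothetical container for the <k,c,u,d> tuple."""
    knowledge: dict        # k: what the system knows
    goal: str              # c: what it wants to communicate
    user_model: dict       # u: who it is talking to
    discourse_model: dict  # d: the style or context to write in


# Made-up example values, just to show the shape of the input.
essay = NLGInput(
    knowledge={"topic": "photosynthesis"},
    goal="explain how plants make food",
    user_model={"audience": "high school teacher"},
    discourse_model={"style": "formal essay"},
)
print(essay.goal)
```

Freezing the dataclass is a design choice on my part: the input is something the generator reads from, not something it should change halfway through.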

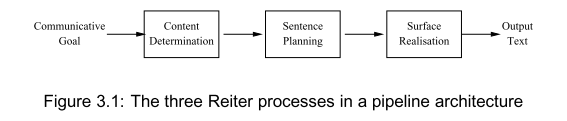

An NLG system usually involves three processes:

- Content determination - what you're going to say, based on knowledge, communicative goal and user's expectations

- Sentence planning - how you're going to say it. Remember, semantically sane!

- Surface realisation - the final output: which specific words to use, whether sentences follow each other nicely and so on. You could call this _style_ or _flow_.

Traditional architecture

Traditionally this has been approached by discretely implementing the three stages and assembling them into a pipeline of some sort.

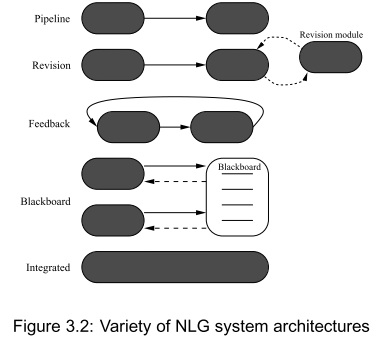
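As a rough illustration of what such a pipeline looks like, here is a toy sketch. The function names, the triple-based knowledge format and the example facts are all assumptions of mine, not something taken from the thesis; real stages are far more involved.

```python
# Made-up knowledge base of (subject, verb, object) facts.
KNOWLEDGE = [
    ("the cat", "chases", "the mouse"),
    ("the cat", "likes", "milk"),
    ("the dog", "chases", "the cat"),
]


def determine_content(knowledge, topic):
    """Content determination: keep only facts about the topic."""
    return [fact for fact in knowledge if fact[0] == topic]


def plan_sentences(facts):
    """Sentence planning: one simple sentence plan per fact."""
    return [{"subject": s, "verb": v, "object": o} for s, v, o in facts]


def realise(plans):
    """Surface realisation: turn each plan into an actual sentence."""
    sentences = [
        f"{p['subject'].capitalize()} {p['verb']} {p['object']}." for p in plans
    ]
    return " ".join(sentences)


def pipeline(knowledge, topic):
    """Each stage only sees the output of the stage before it."""
    return realise(plan_sentences(determine_content(knowledge, topic)))


print(pipeline(KNOWLEDGE, "the cat"))
```

Note how `realise` has no way of asking `determine_content` for a different fact. Once a stage has made its choice, everything downstream has to live with it.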

But what if you don't have a clear communicative goal and just want to say something interesting about a subject? Or what if a later stage could find a better solution, but an earlier stage has already ruled it out?

A number of different architectures have emerged to combat these problems:

- revision - iteratively fix each stage in hopes of finding something better

- feedback - feed findings from later stages back into earlier stages, which can open up options that would otherwise be pruned away

- blackboard - particularly interesting because it uses a common space where results from different stages are posted, so every stage has access to what's already out there
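The blackboard idea is the easiest one to sketch in code. What follows is a toy version under my own assumptions: the board is a plain dictionary, each stage is a function that posts to it when it can, and the stage names and example facts are made up.

```python
def content_stage(board):
    """Post some facts if nobody has done so yet."""
    if "facts" not in board:
        board["facts"] = ["roses are red", "violets are blue"]
        return True
    return False


def planning_stage(board):
    """Post an ordering of the facts once facts are on the board."""
    if "facts" in board and "plan" not in board:
        board["plan"] = sorted(board["facts"])
        return True
    return False


def realisation_stage(board):
    """Post the final text once a plan is on the board."""
    if "plan" in board and "text" not in board:
        board["text"] = ", ".join(board["plan"]).capitalize() + "."
        return True
    return False


def run_blackboard(stages):
    """Keep giving every stage a turn until no stage posts anything new."""
    board = {}
    progress = True
    while progress:
        progress = any([stage(board) for stage in stages])
    return board


# Stages are deliberately listed "backwards": order does not matter,
# because each stage reacts to what is on the board, not to a fixed sequence.
board = run_blackboard([realisation_stage, planning_stage, content_stage])
print(board["text"])
```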

But without a clear communicative goal or end user in mind, the integrated approach looks most promising.

NLG as a search problem

One way to approach natural language generation in an integrated manner is by posing it as a search problem - you have a search space of possible solutions, your job is merely to find the best.

This can be done in many ways:

- hillclimbing - take a random spot on the map. With each step, consider all possible moves and move in the best direction. Hope you don't get trapped in a local maximum.

- systematic search - go through all possible solutions and find the best one. The problem with this approach is that it can take a while to finish, but you always get the optimal solution. Four main ways exist to implement this: chart generation (use charts to define the search space and go from there), truth maintenance systems (have assumptions, make sure they stay true), "redefining the search problem" (a lot of invalid choices can be pruned out based on linguistic knowledge, which helps with the explosive search space size), and constraint logic programming (everyone who's done a Prolog course has seen this, I think).

- stochastic search - this is my favorite approach since it involves a bunch of interesting algorithms. The basic idea is that you can define a set of constraints and then "guess" solutions based on those constraints. But since each constraint is probabilistic, you have a lot of flexibility in coming up with solutions.
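Here is a small sketch of the three flavours on a toy problem: putting six words in a sensible order. Everything in it is an assumption for illustration. The scoring function just counts word pairs that a made-up "grammar" likes, and the stochastic version is a simple random-swap search standing in for the much richer algorithms this family covers.

```python
import itertools
import random

TARGET = ("a", "cat", "sat", "on", "the", "mat")
GOOD_PAIRS = set(zip(TARGET, TARGET[1:]))
WORDS = ["mat", "the", "on", "sat", "cat", "a"]  # scrambled starting point


def score(order):
    """Count adjacent word pairs that the toy grammar likes."""
    return sum(pair in GOOD_PAIRS for pair in zip(order, order[1:]))


def neighbours(order):
    """All orderings reachable by swapping two words."""
    for i, j in itertools.combinations(range(len(order)), 2):
        swapped = list(order)
        swapped[i], swapped[j] = swapped[j], swapped[i]
        yield tuple(swapped)


def hill_climb(start):
    """Always move to the best neighbour; stop when none is better."""
    current = tuple(start)
    while True:
        best = max(neighbours(current), key=score)
        if score(best) <= score(current):
            return current  # a maximum, but possibly only a local one
        current = best


def exhaustive_search(words):
    """Try every ordering; slow, but guaranteed to find the best one."""
    return max(itertools.permutations(words), key=score)


def stochastic_search(start, steps=2000, seed=0):
    """Guess random swaps and keep any guess that is not worse."""
    rng = random.Random(seed)
    current = tuple(start)
    for _ in range(steps):
        i, j = rng.sample(range(len(current)), 2)
        candidate = list(current)
        candidate[i], candidate[j] = candidate[j], candidate[i]
        if score(candidate) >= score(current):
            current = tuple(candidate)
    return current


for search in (hill_climb, exhaustive_search, stochastic_search):
    result = search(WORDS)
    print(search.__name__, score(result), " ".join(result))
```

With six words the exhaustive version only has 720 orderings to check. Add a few more words and it quickly becomes hopeless, which is where the other two start to look attractive.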

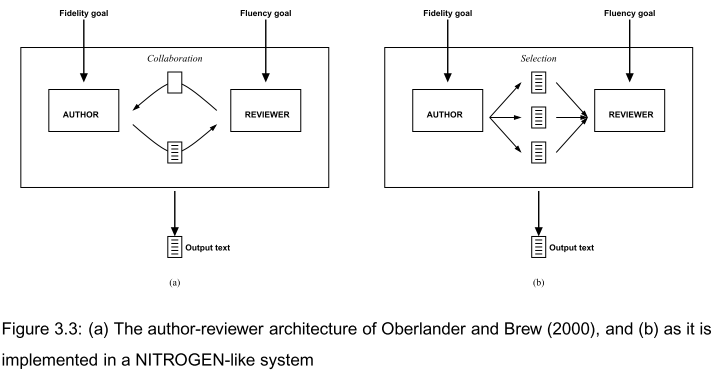

Overgeneration and ranking

This approach is based on the model of an author and a reviewer. The author continuously outputs a bunch of plausible solutions that the reviewer then ranks on viability and finally picks one to present to the user.

The beauty of this approach lies in the fact that each part of the system only has to concern itself with what it does best, while they still collaborate so you aren't trapped in a pipeline architecture.
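A minimal sketch of the author and reviewer split could look like this. The word lists and the "viability" measure are made up for the example; a real reviewer would use something far smarter than counting preferred words.

```python
import itertools


def author(subjects, verbs, objects):
    """Overgenerate: produce every combination without judging any of them."""
    for s, v, o in itertools.product(subjects, verbs, objects):
        yield f"{s} {v} {o}"


def reviewer(candidates, preferred_words):
    """Rank candidates by a toy viability score, best first."""
    def viability(text):
        return sum(word in preferred_words for word in text.split())

    return sorted(candidates, key=viability, reverse=True)


candidates = author(
    ["the cat", "the dog"],
    ["chases", "ignores"],
    ["the mouse", "the ball"],
)
ranked = reviewer(candidates, preferred_words={"dog", "chases", "ball"})
print(ranked[0])
```

The author knows nothing about what the reviewer likes and the reviewer knows nothing about how candidates get made, which is exactly the separation of concerns described above.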

Opportunistic planning

Opportunistic planning is an approach that works best when you don't have a clearly defined communicative goal - so how do you know when you've found the solution?

This approach was developed for a system that produced description labels for items in a museum. From what I understand, users could virtually click around a collection of jewelry, giving the NLG system a simple goal of "tell me something interesting about this".

Without any clear goals and users, even without much advance knowledge of what the system will be talking about, the only viable approach is generating text based on opportunity, like a human guide would.

The crowning moment is when it can connect subsequent items into something like "...and it was work like this which directly inspired work like the Roger Morris brooch on the stand which we looked at earlier".
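I don't know how the museum system was actually built, so treat this as a guess at the core idea only: remember what the visitor has already seen and grab any chance to link back to it. The item names, designers and the single "same designer" rule are all invented for the example.

```python
# Made-up collection; "designer A" and "designer B" are placeholders.
FACTS = {
    "the silver ring": {"designer": "designer A", "note": "a plain band"},
    "the bead necklace": {"designer": "designer B", "note": "strung by hand"},
    "the gold brooch": {"designer": "designer A", "note": "set with a single stone"},
}


def describe(item, facts, seen):
    """Describe an item, linking back to an earlier one if the chance arises."""
    text = f"This is {item}, {facts[item]['note']}."
    for earlier in seen:
        if facts[earlier]["designer"] == facts[item]["designer"]:
            text += (
                f" It is by the same designer as {earlier},"
                " which we looked at earlier."
            )
            break
    seen.append(item)  # remember it for future opportunities
    return text


seen = []
tour = [
    describe(item, FACTS, seen)
    for item in ["the silver ring", "the bead necklace", "the gold brooch"]
]
for line in tour:
    print(line)
```

There is no plan here and no goal beyond "say something about this item". The link to the ring only shows up because the visitor happened to look at it first.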

Poetry?

Poetry suffers from a lot of the problems other NLG systems have already had to tackle - a unity of content and form, lack of clearly defined goals etc.

Therefore the best approach we can take is some combination of opportunistic planning, which gives us the ability to have lightbulb moments while writing poetry, and stochastic search, which gives us the needed flexibility in the face of many constraints without any goals.
