Once upon a time, I made a brilliant decision: We're going to make a new background worker for every analytics event on our platform.

The goal was simple: get real-time analytics, avoid being bound to a single provider, and keep local copies of all events in case something goes wrong.

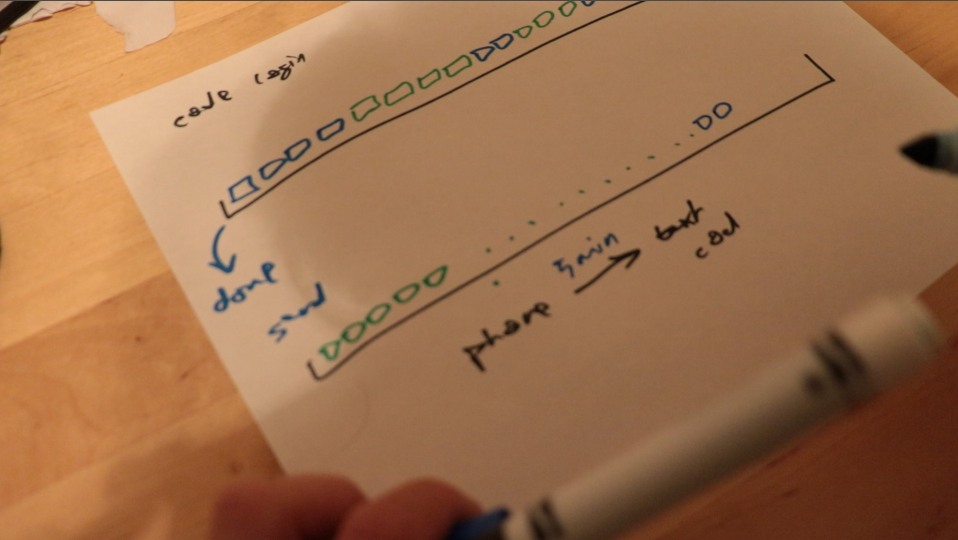

I designed a system that does exactly that. Clients (web and mobile) send events to our server. Our server stores them in the database and puts a new worker on the queue for each one. That worker runs, sends the event to Mixpanel, and marks it as sent.

With this design, we can easily switch to a different provider: just change the call on our backend, and clients keep the same API. Wonderful.

If anything goes wrong when talking to Mixpanel, or if we need to run a complex query involving events and the rest of our data, we can. All events are stored. Wonderful.
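To make the per-event design concrete, here is a minimal pure-Ruby sketch. It is not the production code: `Event`, `FakeMixpanel`, and `PerEventPipeline` are hypothetical names, a Hash stands in for the Postgres events table, and an Array stands in for the Sidekiq queue. It shows the two properties above (the provider hides behind one object clients never see, and every event is stored locally) plus the detail that matters later: one enqueued job per event.

```ruby
# Hypothetical stand-ins: a Struct for rows in the events table, a Hash for
# the table itself, an Array for the Sidekiq queue, and a fake provider.
Event = Struct.new(:id, :name, :sent_at)

# Fake analytics provider that records what it was asked to send.
# Switching providers means passing a different object with a #track method.
class FakeMixpanel
  attr_reader :sent

  def initialize
    @sent = []
  end

  def track(event)
    @sent << event.name
  end
end

class PerEventPipeline
  attr_reader :events, :queue

  def initialize(provider)
    @provider = provider
    @events = {} # stands in for the events table
    @queue = []  # stands in for the single default queue
  end

  # What the API endpoint does: store the event, then enqueue a job for it.
  def receive(name)
    event = Event.new(@events.size + 1, name, nil)
    @events[event.id] = event
    @queue << event.id
    event
  end

  # The job body: look up one event, send it, mark it as sent.
  def perform(event_id)
    event = @events.fetch(event_id)
    @provider.track(event)
    event.sent_at = Time.now
  end

  # Run queued jobs one at a time, like a worker would; returns the job count.
  def drain
    jobs = 0
    until @queue.empty?
      perform(@queue.shift)
      jobs += 1
    end
    jobs
  end
end

provider = FakeMixpanel.new
pipeline = PerEventPipeline.new(provider)
%w[app_open screen_view button_tap].each { |name| pipeline.receive(name) }
jobs_run = pipeline.drain # one job per event: 3 jobs for 3 events
```

In a real Rails app the job would presumably be a Sidekiq worker class (one that includes `Sidekiq::Worker` and is enqueued with `perform_async(event.id)`), so the framework's per-job overhead is paid once for every single event.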

Job well done.

Until one day Yup got a lot of traffic.

No, the analytics didn't stop working. Our database didn't get flooded. Oh no. Don't you worry about our database. Postgres can handle hundreds of millions of rows. Postgres is a champ.

No, no. What happened was that our "We send a code to your phone to log in" system stopped sending codes on time. They arrived 2 minutes, 3 minutes, sometimes an hour late. People retried so often that ten codes would arrive at once for the same person. Out of order, too.

Wait what?

A-ha! Let me explain!

You see, we use Sidekiq to queue our background jobs. It assigns all your tasks to a default queue unless you specify otherwise.

Somewhere along the line, we got lazy and we stopped specifying. Everything went to the same queue and it worked great. Until we had traffic.

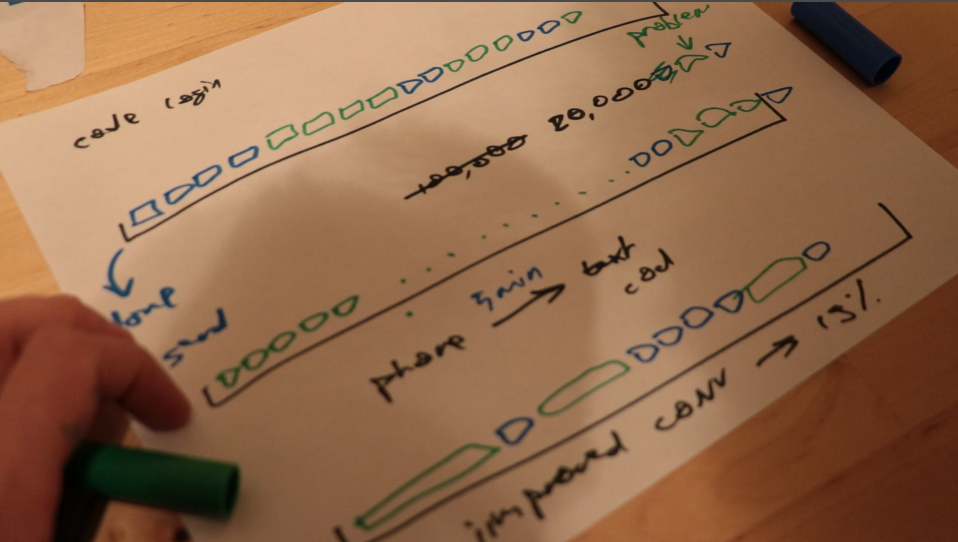

Once we had traffic, our analytics workers filled up the queue. Users created dozens of them before they even reached the login screen. Others created hundreds more after logging in.

At one point, we estimate, almost 20,000 jobs were stuck in the queue. Most of them were analytics. Some were business critical. Many were waiting to let people log in and try our service.

😅
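A toy model shows why one shared queue delays login codes. It assumes a single worker, identical job costs, and uses the 20,000-job backlog estimate above purely as an input. The helper names are hypothetical. `next_job` polls queues in strict priority order; Sidekiq's documentation describes similar strict ordering when queues are listed without weights, and a worker class picks its queue with `sidekiq_options queue: ...`, but check the docs for your Sidekiq version before relying on the details.

```ruby
# Toy model of head-of-line blocking. Assumptions: one worker, every job costs
# the same, and the 20,000-job backlog is an input, not a measurement.
QueuedJob = Struct.new(:kind)

# Strict priority: always take the next job from the first non-empty queue.
def next_job(queues, order)
  name = order.find { |q| !queues[q].empty? }
  name && queues[name].shift
end

# How many jobs run before the first job of `kind` gets picked up?
def jobs_before(queues, order, kind)
  count = 0
  while (job = next_job(queues, order))
    return count if job.kind == kind
    count += 1
  end
  nil # no job of that kind was queued
end

BACKLOG = 20_000

# Everything in the default queue: the login code waits behind the backlog.
shared = {
  "default" => Array.new(BACKLOG) { QueuedJob.new(:analytics) } + [QueuedJob.new(:login_code)]
}
wait_shared = jobs_before(shared, ["default"], :login_code) # => 20000

# Login codes routed to their own queue, which is polled first.
split = {
  "critical" => [QueuedJob.new(:login_code)],
  "default" => Array.new(BACKLOG) { QueuedJob.new(:analytics) }
}
wait_split = jobs_before(split, %w[critical default], :login_code) # => 0
```

Note that a separate queue only reorders the work: all 20,000 analytics jobs still have to run. Cutting the number of jobs is a different fix.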

How do you fix this?

With a for loop.

Let me explain.

Say you're sending each event in its own worker. The job itself is really fast. It takes less than 1 second to execute. But scheduling that job, keeping it around, making a worker to run it, executing the Ruby on Rails machinery… all that takes time. A lot of time.

But instead of being eager and opportunistic, you can send events in batches. Say a worker wakes up every 5 minutes and sends all events from the past 5 minutes that haven't been sent yet.
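Here is a minimal sketch of that batched job in plain Ruby. The names (`BatchedEvent`, `RecordingProvider`, `SendPendingEventsJob`) are hypothetical, an Array stands in for the events table, and scheduling is assumed to happen elsewhere (for example, a cron-style scheduler enqueuing the job every 5 minutes). The heart of it is the loop in `perform`: one job, many events.

```ruby
# Hypothetical stand-ins for the events table and the analytics provider.
BatchedEvent = Struct.new(:name, :created_at, :sent_at)

class RecordingProvider
  attr_reader :sent

  def initialize
    @sent = []
  end

  def track(event)
    @sent << event.name
  end
end

class SendPendingEventsJob
  def initialize(events, provider)
    @events = events
    @provider = provider
  end

  # One run handles every unsent event created up to `now`.
  # Returns how many events it sent.
  def perform(now = Time.now)
    pending = @events.select { |e| e.sent_at.nil? && e.created_at <= now }
    # The whole fix: a plain loop inside one job instead of one job per event.
    pending.each do |event|
      @provider.track(event)
      event.sent_at = now
    end
    pending.size
  end
end

t0 = Time.at(0)
events = [
  BatchedEvent.new("app_open", t0, nil),
  BatchedEvent.new("screen_view", t0 + 60, nil),
  BatchedEvent.new("button_tap", t0 + 120, t0 + 130) # already sent earlier
]
provider = RecordingProvider.new
job = SendPendingEventsJob.new(events, provider)

first_run = job.perform(t0 + 300)  # one job sends both unsent events
second_run = job.perform(t0 + 600) # nothing new, so nothing is sent
```

Selecting "not yet sent" rather than a strict 5-minute window is a deliberate choice in this sketch: if a run fails partway through, the events it didn't reach keep a `nil` `sent_at` and the next run picks them up. In Rails the selection would presumably be a query like `Event.where(sent_at: nil)`, iterated with `find_each` so a large backlog is loaded in batches.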

Now you have a worker that takes longer to run. Several seconds, even a few minutes.

But you run only 12 jobs per hour instead of 10,000.
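The job count follows directly from the schedule: the per-event design runs one job per event, while the batched design runs one job per interval no matter how much traffic arrives. The 10,000-events-per-hour figure below is the article's order-of-magnitude number used as an input, and the helper names are hypothetical.

```ruby
MINUTES_PER_HOUR = 60

# Per-event design: job count grows with traffic.
def per_event_jobs_per_hour(events_per_hour)
  events_per_hour
end

# Batched design: job count depends only on the schedule.
# Assumes the interval divides an hour evenly.
def batched_jobs_per_hour(interval_minutes)
  MINUTES_PER_HOUR / interval_minutes
end

# Worst-case analytics delay is one full interval; with events arriving
# evenly over time, the average delay is about half an interval.
def average_delay_minutes(interval_minutes)
  interval_minutes / 2.0
end

per_event = per_event_jobs_per_hour(10_000) # => 10000
batched = batched_jobs_per_hour(5)          # => 12
avg_delay = average_delay_minutes(5)        # => 2.5
```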

The downside? Instead of real-time analytics, you wait up to 5 minutes to see what's up.

How often do you check those charts anyway? Once a day? Every hour? Pretty sure you're not checking every two seconds.

Login code delivery times dropped from an average of 3 to 4 minutes to about 10 seconds. Sometimes 20.

Our conversion rate from downloading Yup to starting a trial increased by 19%. All from a 5-line for loop. Boom.

Continue reading about That one time a simple for-loop increased conversions by 19%


Who am I and who do I help? I'm Swizec Teller and I turn coders into engineers with "Raw and honest from the heart!" writing. No bullshit. Real insights into the career and skills of a modern software engineer.
