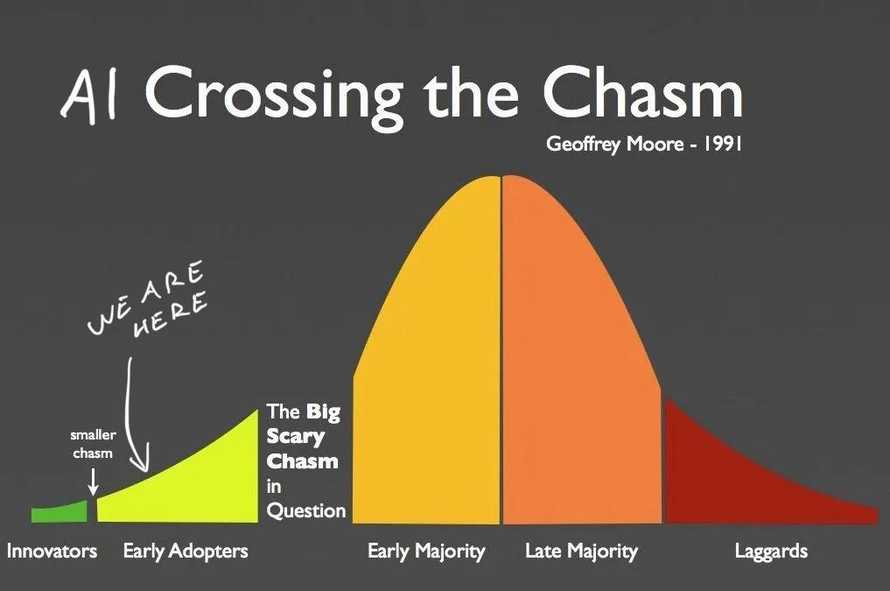

Generative AI is entering the next phase of Moore's technology adoption cycle – early adopters. Lots of people have been asking me for the quickest and cheapest way to get started. I'm writing this article so there's a link.

Why start now

We are here:

Last year was the innovator gold rush. Before that was the pre-innovator research phase. We didn't really know what to do with large language models until someone figured out instruction tuning.

Now that you can say "hey AI do this thing" it suddenly all feels useful and amazing. Although you should tamper your expectations [name|], it's like talking to a wicked smart intern who makes lots of little mistakes.

But the output is useful. Mistakes and all. The field has come a long way since January 2023. Something about investors pouring $50 billion into AI just last year.

AI is useful, but far from perfect

At work I've used AI to summarize NPS surveys into high level summaries, write database migration scripts 🤫, and help with leet code puzzles that aren't worth my time. Like "write a script that iterates a directory".

For personal use I've:

- built a Swiz chatbot that made me feel deeply understood but wasn't interesting to anyone else – that's the Barnum effect

- a related articles feature for swizec.com

- made a tool that writes SEO descriptions for articles, tells me if an article makes sense, suggests improvements, and drafts a twitter thread

- built a script to analyze reader feedback

The copywriting is not good. Comes out squishy and boring to read. But it makes a great first draft. The summaries are fantastic when interpreted by a human who understands the material, and the code generation is pretty sweet after you fix a small bug or two.

Easiest way to start

Here's the easiest way I've found to try if AI can do something. No license keys, no APIs, no signup. Everything runs locally on your machine.

- You get Ollama for your machine. Supports Mac, Linux, and Windows

- You grab ollama-js or ollama-python depending on preference

- You download a model. I suggest trying

ollama pull llama2first - You fire up a script and run the model 👇

import ollama from "ollama"

const response = await ollama.generate({

model: "llama2",

prompt: "<your prompt here>",

systemPrompt: "You are <persona> helping <persona> with <task>",

stream: true,

})

for await (const part of testimonial) {

process.stdout.write(part.response)

}

This runs the llama2 model on your machine, asks your question, and streams back the response word by word. If you're okay waiting in silence while the AI churns, you can set stream: false and console.log the response.

I like to run these with Bun. It's the easiest way to run JavaScript CLI scripts.

Don't be afraid to experiment

You'll want to try a few different prompts. Word your question in different ways and see what works. Try different formatting too. The models seem to like markdown but sometimes simpler is better.

In AI papers they call this "The prompt was discovered empirically". Everyone's just trying shit until it works 😉

RAG is your friend

Retrieval Augmented Generation – RAG – is your friend. If you want to analyze documents, plop that bad boy right in your prompt and ask the model to analyze. Here is a document about blah. What does it say about blah?\n\n<document body> works wonders.

If you get nonsense responses, make sure your prompt includes the full body of your text. Not [object Object]. Happens to me every time. I like to print the full prompt before passing it into the model.

When you start having more content than fits the model's attention span this turns into a search problem. You'll have to get clever about how you choose relevant document fragments to include in the prompt.

Try different models

Ollama is a large language model runner and model collection optimized for consumer hardware. The models that run on your machine aren't as good as the commercial stuff that requires huge GPUs to run.

But it's free and it's simple. That's why I like it for prototyping.

llama2 is in my experience the best general language model that gets close in performance to ChatGPT. Great for summaries and okay at writing. Struggles with code.

Try other models. They have specialties! You'll have to change the model: param in your generation call and you might have to change the prompt. Different models like to be spoken to differently.

Caveats

Using Ollama is great for local experiments and making sure what you want is possible. Fast iteration cycles if you have a strong computer and no faffing around with cloud.

But it can be damn slow on an old computer.

I may have found an excuse to buy a new computer pic.twitter.com/l0WZNIgaR8

— Swizec Teller (@Swizec) March 3, 2024

And it's very much not production ready. You'll have to take your code and adapt to run in the cloud or call an API before you can ship to more than 1 user at a time.

An example

If you'd like an example, here's a script I've been tinkering with to analyze newsletter and workshop feedback 👉 analyze-feedback script. Readme in progress :)

Cheers,

~Swizec

Continue reading about How to start playing with generative AI

Semantically similar articles hand-picked by GPT-4

- Building apps with OpenAI and ChatGPT

- Coaching AI to write your code

- It's never been this easy to build a webapp

- AI Engineer Summit report

- That time serverless melted my credit card

Learned something new?

Read more Software Engineering Lessons from Production

I write articles with real insight into the career and skills of a modern software engineer. "Raw and honest from the heart!" as one reader described them. Fueled by lessons learned over 20 years of building production code for side-projects, small businesses, and hyper growth startups. Both successful and not.

Subscribe below 👇

Software Engineering Lessons from Production

Join Swizec's Newsletter and get insightful emails 💌 on mindsets, tactics, and technical skills for your career. Real lessons from building production software. No bullshit.

"Man, love your simple writing! Yours is the only newsletter I open and only blog that I give a fuck to read & scroll till the end. And wow always take away lessons with me. Inspiring! And very relatable. 👌"

Have a burning question that you think I can answer? Hit me up on twitter and I'll do my best.

Who am I and who do I help? I'm Swizec Teller and I turn coders into engineers with "Raw and honest from the heart!" writing. No bullshit. Real insights into the career and skills of a modern software engineer.

Want to become a true senior engineer? Take ownership, have autonomy, and be a force multiplier on your team. The Senior Engineer Mindset ebook can help 👉 swizec.com/senior-mindset. These are the shifts in mindset that unlocked my career.

Curious about Serverless and the modern backend? Check out Serverless Handbook, for frontend engineers 👉 ServerlessHandbook.dev

Want to Stop copy pasting D3 examples and create data visualizations of your own? Learn how to build scalable dataviz React components your whole team can understand with React for Data Visualization

Want to get my best emails on JavaScript, React, Serverless, Fullstack Web, or Indie Hacking? Check out swizec.com/collections

Did someone amazing share this letter with you? Wonderful! You can sign up for my weekly letters for software engineers on their path to greatness, here: swizec.com/blog

Want to brush up on your modern JavaScript syntax? Check out my interactive cheatsheet: es6cheatsheet.com

By the way, just in case no one has told you it yet today: I love and appreciate you for who you are ❤️